高质量静态稳定的TAA改进

在被TAA中的静态Flicking折磨了两个月后,终于决定花一些来时间来修复这些问题。

最后给出源码。

目标

- 高质量的采样,理想情况下比拟16xSSAA。

- 精确的Clamp,不能出现一点残影。

- 静态稳定性,大量减少静态场景的Flickering。

- 最好的性能,不引入额外的RT。

- 锐利的输出。

实现目标#1

对于目标1,16x的SSAA意味着TAA的抖动周期至少为16帧。但通常来说,越大的帧周期,画面稳定性越差,并且对帧率的要求更高。

因此,直接设Halton序列的周期为16是比较好的选择。

实现目标#2

我测试了很多Clamp方法,目前最优秀的还是AMD的Variance Clamp做法,他们在算方差前就提前做好了Tonemapper, 最后Clamp出来的效果非常的准确。

实现目标#3和#4

我大量的时间都花在这里了,所以会更加详细的说明思路。

静态Flickering是因为Halton序列随机抖动导致远处微小几何体在随机某一帧光栅化,断断续续的,因此很容易被History Clamp剔除掉贡献,然后就会出现静态的Flicking。

治标做法是给场景做好网格LOD。

治本做法,标记出这些Flickering的像素,将它们锁住,并增加它们在Clamp时Box的Size,或者根本就不Clamp。

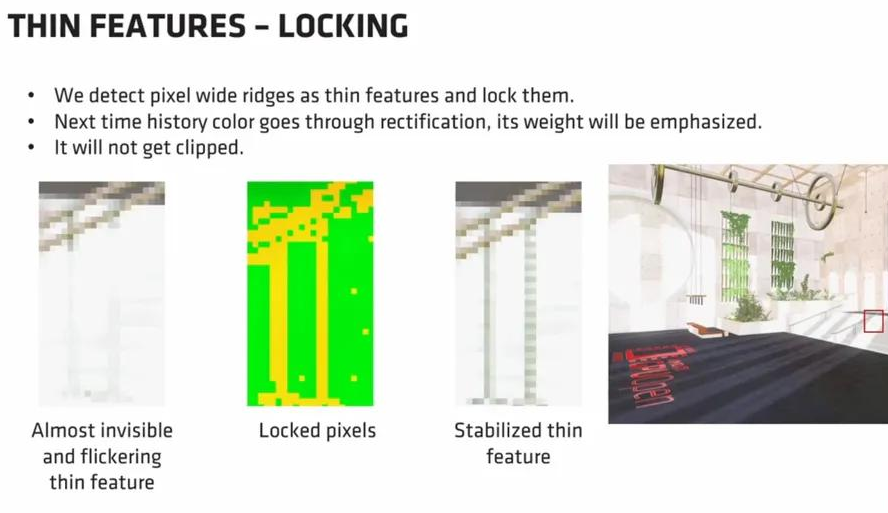

这种做法其实来自AMD的FSR2.0:

思路很清晰但做起来付出的代价却很高。

首先排除LockPixel自己的RT消耗,我们如果要准确标记出Lock Pixel,我们得确保满足如下条件:

- 这部分像素在上一帧和这一帧没有移动(速度为0)。

- 这部分像素的深度和历史帧深度存在突变。

- 这部分像素的亮度和历史帧亮度存在突变。

条件#1确保静态的像素才会被锁住。由于TAA每帧在一个像素内Jitter,所以判断两帧内是否移动,得采样3x3范围内的速度缓冲做判断,并且还得保持历史帧速度缓冲。

条件#2和条件#3同样得在3x3范围内做,并且这个突变的范围需要设置得非常的微妙,需要耐心调整。

我反正是做着做着要吐了。

我最终的做法:

其实调整BlendFactor为一个较低的值可以很好的抑制闪烁。但它会带来拖影。

所以在History.w中存入一个Lerp Factor,根据速度来调整clamp的box size。

这样,不会引入任何额外的RT。

实现目标#5

AMD的CAS是最好的锐化算法了,用在这里非常合适。

我这边实现了LDS优化的版本。一起放在代码里。细节详见如下:

#Pass 0: TAAMain:

#version 460

#include "Common.glsl"

// Temporal Anti-Alias

#define LOCAL_SIZE_XY 16

layout (local_size_x = LOCAL_SIZE_XY, local_size_y = LOCAL_SIZE_XY, local_size_z = 1) in;

#define VIEW_DATA_SET 0

#include "ViewData.glsl"

#define FRAME_DATA_SET 2

#include "FrameData.glsl"

layout (set = 1, binding = 0, rgba16f) uniform image2D outHdrColor;

layout (set = 1, binding = 1) uniform sampler2D inDepth;

layout (set = 1, binding = 2) uniform sampler2D inHistory;

layout (set = 1, binding = 3) uniform sampler2D inVelocity;

layout (set = 1, binding = 4) uniform sampler2D inHdrColor;

struct TAAPushConstant

{

uint firstRender;

uint camMove;

};

layout(push_constant) uniform block

{

TAAPushConstant pushConstant;

};

bool IsFirstFrame()

{

return pushConstant.firstRender != 0;

}

bool IsCamMove()

{

return pushConstant.camMove != 0;

}

// TAA random offset within one pixel range.

// so use 3x3 tap to get safe value.

const ivec2 kPattern3x3[9] = {

ivec2(-1,-1),

ivec2(-1, 0),

ivec2(-1, 1),

ivec2( 0, 1),

ivec2( 0, 0),

ivec2( 0,-1),

ivec2( 1, 1),

ivec2( 1, 0),

ivec2( 1,-1)

};

const float kRpc9 = 1.0f / 9.0f;

// keep 1 pixel border for lds, to keep edge tap safe.

const int kBorderSize = 1;

const int kGroupSize = LOCAL_SIZE_XY;

const int kLdsLength = kGroupSize + kBorderSize * 2;

const int kLdsArea = kLdsLength * kLdsLength;

const float kTinyFloat = 1e-8f;

const float kMaxFloat16 = 32767.0f;

const float kMaxFloat16u = 65535.0f;

shared vec3 sharedColor[kLdsLength][kLdsLength];

shared float sharedDepth[kLdsLength][kLdsLength];

vec3 reinhard(vec3 hdr)

{

return hdr / (hdr + 1.0f);

}

vec3 reinhardInverse(in vec3 sdr)

{

return sdr / max(1.0f - sdr, 1e-5f);

}

vec3 ldsLoadColor(ivec2 GId)

{

GId += ivec2(kBorderSize);

return sharedColor[GId.x][GId.y];

}

// store color with reinhard tonemmaper.

void ldsStoreColor(ivec2 GId, vec3 color)

{

sharedColor[GId.x][GId.y] = reinhard(color);

}

float ldsLoadDepth(ivec2 GId)

{

GId += ivec2(kBorderSize);

return sharedDepth[GId.x][GId.y];

}

void ldsStoreDepth(ivec2 GId, float depth)

{

sharedDepth[GId.x][GId.y] = depth;

}

float luminance(vec3 color)

{

return max(dot(color, vec3(0.299f, 0.587f, 0.114f)), 1e-5f);

}

void storeColorDepth(ivec2 GId, ivec2 TId, ivec2 size)

{

TId = clamp(TId, ivec2(0,0), size - ivec2(1,1));

// store color.

ldsStoreColor(GId, texelFetch(inHdrColor, TId, 0).rgb);

// store linear z.

float linearZ = linearizeDepth(texelFetch(inDepth, TId, 0).r, viewData.camInfo.z, viewData.camInfo.w);

ldsStoreDepth(GId, linearZ);

}

void prepareLds(ivec2 topLeft, ivec2 workSize, int groupIndex)

{

// 4 sample per pixel.

if(groupIndex < (kLdsArea >> 2)) // 1 / 4 area pixel work.

{

// sample [0, 0]

ivec2 id0 = ivec2(

groupIndex % kLdsLength,

groupIndex / kLdsLength);

storeColorDepth(id0, topLeft + id0, workSize);

// sample [0.25, 0.25]

ivec2 id1 = ivec2(

(groupIndex + (kLdsArea >> 2)) % kLdsLength,

(groupIndex + (kLdsArea >> 2)) / kLdsLength);

storeColorDepth(id1, topLeft + id1, workSize);

// sample [0.5, 0.5]

ivec2 id2 = ivec2(

(groupIndex + (kLdsArea >> 1)) % kLdsLength,

(groupIndex + (kLdsArea >> 1)) / kLdsLength);

storeColorDepth(id2, topLeft + id2, workSize);

// sample [0.75, 0.75]

ivec2 id3 = ivec2(

(groupIndex + kLdsArea * 3 / 4) % kLdsLength,

(groupIndex + kLdsArea * 3 / 4) / kLdsLength);

storeColorDepth(id3, topLeft + id3, workSize);

}

}

// linear z cloest test.

void depthGetClosest(ivec2 pos, inout float cloestDepth, inout ivec2 cloestPos)

{

float d = ldsLoadDepth(pos);

if(d < cloestDepth)

{

cloestDepth = d;

cloestPos = pos;

}

}

// 3x3 tap get closet pos depth.

float velocitySample3x3Closest(ivec2 groupPos, ivec2 topLeft, out vec2 velocity)

{

float minDepth = 1.0f;

ivec2 minPos = groupPos;

depthGetClosest(groupPos + kPattern3x3[0], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[1], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[2], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[3], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[4], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[5], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[6], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[7], minDepth, minPos);

depthGetClosest(groupPos + kPattern3x3[8], minDepth, minPos);

velocity = texelFetch(inVelocity, topLeft + minPos, 0).xy;

return minDepth;

}

// catmull Rom 9 tap sampler.

// sTex: linear clamp sampler2D.

// uv: sample uv.

// resolution: working rt resolution.

vec3 catmullRom9Sample(sampler2D sTex, vec2 uv, vec2 resolution)

{

vec2 samplePos = uv * resolution;

vec2 texPos1 = floor(samplePos - 0.5f) + 0.5f;

vec2 f = samplePos - texPos1;

vec2 w0 = f * (-0.5f + f * (1.0f - 0.5f * f));

vec2 w1 = 1.0f + f * f * (-2.5f + 1.5f * f);

vec2 w2 = f * (0.5f + f * (2.0f - 1.5f * f));

vec2 w3 = f * f * (-0.5f + 0.5f * f);

vec2 w12 = w1 + w2;

vec2 offset12 = w2 / (w1 + w2);

vec2 texPos0 = texPos1 - 1.0f;

vec2 texPos3 = texPos1 + 2.0f;

vec2 texPos12 = texPos1 + offset12;

texPos0 /= resolution;

texPos3 /= resolution;

texPos12 /= resolution;

vec3 result = vec3(0.0f);

result += textureLod(sTex, vec2(texPos0.x, texPos0.y), 0).xyz * w0.x * w0.y;

result += textureLod(sTex, vec2(texPos12.x, texPos0.y), 0).xyz * w12.x * w0.y;

result += textureLod(sTex, vec2(texPos3.x, texPos0.y), 0).xyz * w3.x * w0.y;

result += textureLod(sTex, vec2(texPos0.x, texPos12.y), 0).xyz * w0.x * w12.y;

result += textureLod(sTex, vec2(texPos12.x, texPos12.y), 0).xyz * w12.x * w12.y;

result += textureLod(sTex, vec2(texPos3.x, texPos12.y), 0).xyz * w3.x * w12.y;

result += textureLod(sTex, vec2(texPos0.x, texPos3.y), 0).xyz * w0.x * w3.y;

result += textureLod(sTex, vec2(texPos12.x, texPos3.y), 0).xyz * w12.x * w3.y;

result += textureLod(sTex, vec2(texPos3.x, texPos3.y), 0).xyz * w3.x * w3.y;

return max(result, vec3(0.0f));

}

void main()

{

ivec2 workSize = textureSize(inHdrColor, 0).xy;

ivec2 topLeft = ivec2(gl_WorkGroupID.xy) * kGroupSize - kBorderSize;

ivec2 groupPos = ivec2(gl_LocalInvocationID.xy);

int groupIndex = int(gl_LocalInvocationIndex);

prepareLds(topLeft, workSize, groupIndex);

groupMemoryBarrier();

barrier();

ivec2 pixelPos = ivec2(gl_GlobalInvocationID.xy);

if (pixelPos.x >= workSize.x || pixelPos.y >= workSize.y)

{

return;

}

vec2 texelSize = 1.0f / vec2(workSize);

vec2 uv = (vec2(pixelPos) + vec2(0.5)) * texelSize;

// get cloest velocity.

vec2 velocity;

const float cloestDepth = velocitySample3x3Closest(groupPos, topLeft, velocity);

const bool bSky = cloestDepth <= BG_DEPTH;

// reproject uv.

vec2 reprojectedUV = uv - velocity;

float velocityLerp = 0.0f;

if(!IsFirstFrame())

{

velocityLerp = texture(inHistory, reprojectedUV).w;

}

const float ideaStaticBoxSize = 2.5f;

float staticBoxSize = IsCamMove() ? ideaStaticBoxSize : mix(0.5f, ideaStaticBoxSize, velocityLerp);

float boxSize = mix(0.5f, staticBoxSize, bSky ? 0.0f : smoothstep(0.02f, 0.0f, length(velocity)));

// in center color.

vec3 colorIn = ldsLoadColor(groupPos);

// sample history color.

vec3 colorHistory = catmullRom9Sample(inHistory, reprojectedUV, vec2(workSize));

colorHistory = reinhard(colorHistory);

// variance clamp.

vec3 clampHistory;

{

float wsum = 0.0f;

vec3 vsum = vec3(0.0f, 0.0f, 0.0f);

vec3 vsum2 = vec3(0.0f, 0.0f, 0.0f);

for (int y = -1; y <= 1; ++y)

{

for (int x = -1; x <= 1; ++x)

{

const vec3 neigh = ldsLoadColor(groupPos + ivec2(x, y));

const float w = exp(-0.75f * (x * x + y * y));

vsum2 += neigh * neigh * w;

vsum += neigh * w;

wsum += w;

}

}

const vec3 ex = vsum / wsum;

const vec3 ex2 = vsum2 / wsum;

const vec3 dev = sqrt(max(ex2 - ex * ex, 0.0f));

vec3 nmin = ex - dev * boxSize;

vec3 nmax = ex + dev * boxSize;

clampHistory = clamp(colorHistory, nmin, nmax);

}

// when camera move, use this.

// when camera don't move, use more bigger blend factor if motion factor check.

const float ideaLerpFactor = 0.01f;

float blendFactor = ideaLerpFactor;

{

const float threshold = 0.5f;

const float base = 0.5f;

const float gather = 0.1666f;

// subpixel flicker reduce

float depth = linearizeDepth(cloestDepth, viewData.camInfo.z, viewData.camInfo.w);

float texelVelMag = length(velocity * vec2(workSize)) * depth;

float subpixelMotion = clamp(threshold / (texelVelMag + kTinyFloat), 0.0f, 1.0f);

// something moveing

float dynamicBlendFactor = texelVelMag * base + subpixelMotion * gather;

// lumiance bias correct.

float luminanceHistory = luminance(clampHistory);

float luminanceCurrent = luminance(colorIn);

float unbiasedDifference = abs(luminanceCurrent - luminanceHistory) / ((max(luminanceCurrent, luminanceHistory) + 0.3));

dynamicBlendFactor *= 1.0 - unbiasedDifference;

// clamp

dynamicBlendFactor = clamp(dynamicBlendFactor, 0.0f, 0.4f);

float lerpFactor = length(velocity * vec2(workSize)) * 5.0f;

lerpFactor = clamp(lerpFactor, 0, 1);

blendFactor = bSky ? blendFactor : mix(blendFactor, dynamicBlendFactor, lerpFactor);

// tiny move, so reset lerp factor.

velocityLerp = lerpFactor > 0.01f ? 0 : velocityLerp;

// mix lerp factor by frames to get a good clip value.

velocityLerp = mix(velocityLerp, 1.0f, ideaLerpFactor);

}

vec3 colorResolve = mix(clampHistory, colorIn, blendFactor);

// half16 safe clamp.

colorResolve = min(vec3(65504.0f), colorResolve);

imageStore(outHdrColor, ivec2(gl_GlobalInvocationID.xy), vec4(colorResolve, velocityLerp));

}

Pass#1 TAASharpen:

#version 460

#define LOCAL_SIZE_XY 16

layout (local_size_x = LOCAL_SIZE_XY, local_size_y = LOCAL_SIZE_XY, local_size_z = 1) in;

// out

layout (set = 0, binding = 0,rgba16f) uniform image2D hdrImage;

// in

layout (set = 0, binding = 1,rgba16f) uniform image2D historyImage;

layout (set = 0, binding = 2,rgba16f) uniform image2D inTAAImage;

struct TAASharpenPushConstant

{

uint sharpenMethod;

float sharpness;

};

layout(push_constant) uniform block

{

TAASharpenPushConstant pushConstant;

};

// same with cpp.

#define SHARPEN_OFF 0

// Bloom flicking.

#define SHARPEN_RESPONSIVE 1

// Bloom stable.

#define SHARPEN_CAS 2

const int kBorderSize = 1;

const int kGroupSize = LOCAL_SIZE_XY;

const int kLdsLength = kGroupSize + kBorderSize * 2;

const int kLdsArea = kLdsLength * kLdsLength;

shared vec4 sharedColor[kLdsLength][kLdsLength];

vec4 ldsLoadColor(ivec2 GId)

{

GId += ivec2(kBorderSize);

return sharedColor[GId.x][GId.y];

}

void ldsStoreColor(ivec2 GId, vec4 color)

{

sharedColor[GId.x][GId.y] = color;

}

void storeColor(ivec2 GId, ivec2 TId, ivec2 size)

{

TId = clamp(TId, ivec2(0,0), size - ivec2(1,1));

// store color.

ldsStoreColor(GId, imageLoad(inTAAImage, TId));

}

void prepareLds(ivec2 topLeft, ivec2 workSize, int groupIndex)

{

// 4 sample per pixel.

if(groupIndex < (kLdsArea >> 2)) // 1 / 4 area pixel work.

{

// sample [0, 0]

ivec2 id0 = ivec2(

groupIndex % kLdsLength,

groupIndex / kLdsLength);

storeColor(id0, topLeft + id0, workSize);

// sample [0.25, 0.25]

ivec2 id1 = ivec2(

(groupIndex + (kLdsArea >> 2)) % kLdsLength,

(groupIndex + (kLdsArea >> 2)) / kLdsLength);

storeColor(id1, topLeft + id1, workSize);

// sample [0.5, 0.5]

ivec2 id2 = ivec2(

(groupIndex + (kLdsArea >> 1)) % kLdsLength,

(groupIndex + (kLdsArea >> 1)) / kLdsLength);

storeColor(id2, topLeft + id2, workSize);

// sample [0.75, 0.75]

ivec2 id3 = ivec2(

(groupIndex + kLdsArea * 3 / 4) % kLdsLength,

(groupIndex + kLdsArea * 3 / 4) / kLdsLength);

storeColor(id3, topLeft + id3, workSize);

}

}

float min3x(float a, float b, float c)

{

return min(min(a, b), c);

}

float max3x(float a, float b, float c)

{

return max(max(a, b), c);

}

vec3 reinhardInverse(in vec3 sdr)

{

return sdr / max(1.0f - sdr, 1e-5f);

}

vec3 RGBToYCoCg(in vec3 rgb)

{

return vec3(

0.25f * rgb.r + 0.5f * rgb.g + 0.25f * rgb.b,

0.5f * rgb.r - 0.5f * rgb.b,

-0.25f * rgb.r + 0.5f * rgb.g - 0.25f * rgb.b

);

}

vec3 YCoCgToRGB(in vec3 yCoCg)

{

return vec3(

yCoCg.x + yCoCg.y - yCoCg.z,

yCoCg.x + yCoCg.z,

yCoCg.x - yCoCg.y - yCoCg.z

);

}

vec3 ApplySharpening(ivec2 groupPos)

{

const vec3 top = ldsLoadColor(groupPos + ivec2( 0, 1)).xyz;

const vec3 left = ldsLoadColor(groupPos + ivec2( 1, 0)).xyz;

const vec3 center = ldsLoadColor(groupPos + ivec2( 0, 0)).xyz;

const vec3 right = ldsLoadColor(groupPos + ivec2(-1, 0)).xyz;

const vec3 bottom = ldsLoadColor(groupPos + ivec2( 0, -1)).xyz;

vec3 result = RGBToYCoCg(center);

float unsharpenMask = 4.0f * result.x;

unsharpenMask -= RGBToYCoCg(top).x;

unsharpenMask -= RGBToYCoCg(bottom).x;

unsharpenMask -= RGBToYCoCg(left).x;

unsharpenMask -= RGBToYCoCg(right).x;

result.x = min(result.x + 0.25f * unsharpenMask, 1.1f * result.x);

return YCoCgToRGB(result);

}

// AMD CAS Filter for sharpen.

vec3 AMDCASFilter(float sharpness, ivec2 groupPos)

{

ivec2 pixelPos = ivec2(gl_GlobalInvocationID.xy);

// a b c

// d e f

// g h i

vec3 a = ldsLoadColor(groupPos + ivec2(-1, -1)).xyz;

vec3 b = ldsLoadColor(groupPos + ivec2( 0, -1)).xyz;

vec3 c = ldsLoadColor(groupPos + ivec2( 1, -1)).xyz;

vec3 d = ldsLoadColor(groupPos + ivec2(-1, 0)).xyz;

vec3 e = ldsLoadColor(groupPos + ivec2( 0, 0)).xyz;

vec3 f = ldsLoadColor(groupPos + ivec2( 1, 0)).xyz;

vec3 g = ldsLoadColor(groupPos + ivec2(-1, 1)).xyz;

vec3 h = ldsLoadColor(groupPos + ivec2( 0, 1)).xyz;

vec3 i = ldsLoadColor(groupPos + ivec2( 1, 1)).xyz;

float mnR = min3x(min3x(d.r,e.r,f.r),b.r,h.r);

float mnG = min3x(min3x(d.g,e.g,f.g),b.g,h.g);

float mnB = min3x(min3x(d.b,e.b,f.b),b.b,h.b);

float mnR2 = min3x(min3x(mnR,a.r,c.r),g.r,i.r);

float mnG2 = min3x(min3x(mnG,a.g,c.g),g.g,i.g);

float mnB2 = min3x(min3x(mnB,a.b,c.b),g.b,i.b);

mnR = mnR + mnR2;

mnG = mnG + mnG2;

mnB = mnB + mnB2;

float mxR = max3x(max3x(d.r,e.r,f.r),b.r,h.r);

float mxG = max3x(max3x(d.g,e.g,f.g),b.g,h.g);

float mxB = max3x(max3x(d.b,e.b,f.b),b.b,h.b);

float mxR2 = max3x(max3x(mxR,a.r,c.r),g.r,i.r);

float mxG2 = max3x(max3x(mxG,a.g,c.g),g.g,i.g);

float mxB2 = max3x(max3x(mxB,a.b,c.b),g.b,i.b);

mxR = mxR + mxR2;

mxG = mxG + mxG2;

mxB = mxB + mxB2;

float rcpMR = 1.0f / mxR;

float rcpMG = 1.0f / mxG;

float rcpMB = 1.0f / mxB;

float ampR = clamp(min(mnR, 2.0f - mxR) * rcpMR, 0.0f, 1.0f);

float ampG = clamp(min(mnG, 2.0f - mxG) * rcpMG, 0.0f, 1.0f);

float ampB = clamp(min(mnB, 2.0f - mxB) * rcpMB, 0.0f, 1.0f);

// Shaping amount of sharpening.

ampR = sqrt(ampR);

ampG = sqrt(ampG);

ampB = sqrt(ampB);

// Filter shape.

// 0 w 0

// w 1 w

// 0 w 0

float peak = - 1.0f / mix(8.0f, 5.0f, clamp(sharpness, 0.0f, 1.0f));

float wR = ampR * peak;

float wG = ampG * peak;

float wB = ampB * peak;

float rcpWeightR = 1.0f / (1.0f + 4.0f * wR);

float rcpWeightG = 1.0f / (1.0f + 4.0f * wG);

float rcpWeightB = 1.0f / (1.0f + 4.0f * wB);

vec3 outColor;

outColor.r = clamp((b.r * wR + d.r * wR + f.r * wR + h.r * wR + e.r) * rcpWeightR, 0.0f, 1.0f);

outColor.g = clamp((b.g * wG + d.g * wG + f.g * wG + h.g * wG + e.g) * rcpWeightG, 0.0f, 1.0f);

outColor.b = clamp((b.b * wB + d.b * wB + f.b * wB + h.b * wB + e.b) * rcpWeightB, 0.0f, 1.0f);

return outColor;

}

void main()

{

ivec2 workSize = imageSize(inTAAImage);

ivec2 topLeft = ivec2(gl_WorkGroupID.xy) * kGroupSize - kBorderSize;

ivec2 groupPos = ivec2(gl_LocalInvocationID.xy);

int groupIndex = int(gl_LocalInvocationIndex);

prepareLds(topLeft, workSize, groupIndex);

groupMemoryBarrier();

barrier();

if (gl_GlobalInvocationID.x >= workSize.x || gl_GlobalInvocationID.y >= workSize.y)

{

return;

}

// load center color, mask valid on w channel.

vec4 colorIn = ldsLoadColor(groupPos);

// cache center color.

const vec3 center = colorIn.xyz;

// history color is after reinhard tonemapper. so here reverse.

colorIn.xyz = reinhardInverse(colorIn.xyz);

// update history image.

imageStore(historyImage, ivec2(gl_GlobalInvocationID.xy), colorIn);

// out color.

vec3 color = center;

float sharpness = pushConstant.sharpness;

if(pushConstant.sharpenMethod == SHARPEN_RESPONSIVE)

{

color = ApplySharpening(groupPos);

}

else if(pushConstant.sharpenMethod == SHARPEN_CAS)

{

color = AMDCASFilter(sharpness, groupPos);

}

// out hdr color, also tonemapper reverse.

imageStore(hdrImage, ivec2(gl_GlobalInvocationID.xy), vec4(reinhardInverse(color), 1.0f));

}